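Requirement: crawl a given Baidu Tieba forum, with a configurable number of pages to crawl, and store every thread and its replies in a MySQL database.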

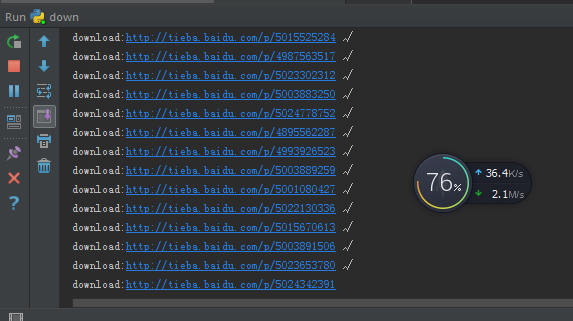

Here is what a run looks like. With sensible values for threadNum and maxURL, the crawler can saturate your network bandwidth.
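To make the two settings concrete: in the script further down, threadNum is the number of worker threads and maxURL is how many URLs a worker claims per trip to the shared queue. The following is only a minimal sketch of that batching idea, not the crawler itself. The names `run_workers` and `fake_fetch` are hypothetical, the queue is an in-memory list rather than the MySQL table, and the example.com URLs are made up.

```python
import threading


def run_workers(urls, thread_num, max_url, fetch):
    """Process every URL once with thread_num threads claiming max_url URLs at a time."""
    pending = list(urls)
    done = []
    lock = threading.Lock()

    def worker():
        while True:
            # Claim a batch under the lock so no two threads take the same URL.
            with lock:
                batch = pending[:max_url]
                del pending[:max_url]
            if not batch:
                break
            for url in batch:
                result = fetch(url)  # the slow call runs outside the lock
                with lock:
                    done.append((url, result))

    threads = [threading.Thread(target=worker) for _ in range(thread_num)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return done


def fake_fetch(url):
    # Hypothetical stand-in for the real download; returns the URL length.
    return len(url)


urls = ["http://example.com/p/%d" % i for i in range(25)]
results = run_workers(urls, thread_num=4, max_url=10, fetch=fake_fetch)
print(len(results))  # 25
```

A larger batch means fewer trips to the shared queue but a less even split of work between threads. More threads only help while the network, not the lock, is the bottleneck.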

Database structure:

#
# Structure for table "tieba"
#
CREATE TABLE `tieba` (
  `Id` int(11) NOT NULL AUTO_INCREMENT,
  `url` varchar(255) DEFAULT NULL,
  `name` varchar(255) DEFAULT NULL,
  `context` text,
  PRIMARY KEY (`Id`)
) ENGINE=MyISAM AUTO_INCREMENT=958 DEFAULT CHARSET=utf8;

#
# Structure for table "url"
#
CREATE TABLE `url` (
  `id` int(11) NOT NULL AUTO_INCREMENT,
  `url` varchar(50) NOT NULL,
  `title` varchar(255) DEFAULT NULL,
  `author` varchar(255) DEFAULT NULL,
  `time` varchar(32) DEFAULT NULL,
  `status` tinyint(3) NOT NULL DEFAULT '0',
  PRIMARY KEY (`id`)
) ENGINE=MyISAM AUTO_INCREMENT=51 DEFAULT CHARSET=utf8;
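The crawler script uses the `status` column of the `url` table as a small work queue: rows start at 0, are set to 1 when a worker claims them, to 2 once the thread has been saved, and to -1 if the download fails. Those meanings are read off the script; the sketch below only illustrates the lifecycle. It assumes `sqlite3` in place of MySQL so it runs without a server, and the constant names, the `claim` and `finish` helpers, and the thread IDs in the URLs are hypothetical.

```python
import sqlite3

# Status values as used by the crawler script; the names are illustrative.
PENDING, CLAIMED, DONE, FAILED = 0, 1, 2, -1

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE url ("
    "id INTEGER PRIMARY KEY AUTOINCREMENT, "
    "url TEXT NOT NULL, "
    "status INTEGER NOT NULL DEFAULT 0)"
)
conn.executemany(
    "INSERT INTO url (url) VALUES (?)",
    [("http://tieba.baidu.com/p/%d" % i,) for i in range(1, 6)],  # made-up IDs
)


def claim(conn, limit):
    """Take up to `limit` pending URLs and mark them as claimed."""
    rows = conn.execute(
        "SELECT url FROM url WHERE status = ? LIMIT ?", (PENDING, limit)
    ).fetchall()
    urls = [row[0] for row in rows]
    for u in urls:
        conn.execute("UPDATE url SET status = ? WHERE url = ?", (CLAIMED, u))
    return urls


def finish(conn, url, ok):
    """Record the outcome of one download."""
    conn.execute(
        "UPDATE url SET status = ? WHERE url = ?", (DONE if ok else FAILED, url)
    )


batch = claim(conn, 2)
finish(conn, batch[0], ok=True)
finish(conn, batch[1], ok=False)
counts = dict(conn.execute("SELECT status, COUNT(*) FROM url GROUP BY status").fetchall())
print(counts)
```

Rows left at status 1 after a crash were claimed but never finished, so resetting them to 0 would be one way to resume an interrupted crawl.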

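# coding:utf-8
# Python 2 script (uses urllib2 and print statements)
import MySQLdb
import re
import urllib2
import threading
import time

# Arguments are host, user, password, database name
db = MySQLdb.connect("10.255.46.101", "py", "py", "py")
# One cursor is shared by all threads, so every use of it must hold `lock`
cursor = db.cursor()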

def downloadURL(bar, maxpage=1):
    # Fetch the first `maxpage` list pages of the forum `bar` and store one row per thread in `url`.
    print "start download URL"
    # Start from empty tables on every run
    cursor.execute("truncate table url")
    cursor.execute("truncate table tieba")
    # Captures: thread link, title, author, creation time
    p = r'<a href="(.*?)" title="(.*?)" target="_blank" class="j_th_tit ">(?:[\s\S]*?)title="主题作者: (.*?)"(?:[\s\S]*?)title="创建时间">(.*?)</span>'
    for page in range(maxpage):
        # Each list page holds 50 threads, so pn advances in steps of 50
        url = "http://tieba.baidu.com/f?kw=" + bar + "&ie=utf-8&pn=" + str(page * 50)
        print url
        html = urllib2.urlopen(url).read()
        print "page:" + str(page)
        for href, title, author, created in re.findall(p, html):
            # Parameterized query: quotes in titles or author names cannot break the SQL
            cursor.execute(
                "insert into url (title,url,author,time) values (%s,%s,%s,%s)",
                (title, 'http://tieba.baidu.com' + href, author, created))

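def getURL(limit):
    # Claim up to `limit` pending URLs (status 0) and mark them as in progress (status 1).
    # The caller must hold `lock`, because the cursor is shared between threads.
    if cursor.execute("select url from url where status = 0 limit " + str(int(limit))):
        urls = []
        for row in cursor.fetchall():
            cursor.execute("update url set status = 1 where url = %s", (row[0],))
            urls.append(row[0])
        return urls
    else:
        return None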

def download_tie():
    # Worker loop: claim a batch of URLs, download each thread page, store its posts.
    # Captures: post author name, post content
    p = r'<a (?:[\s\S]*?)class="p_author_name j_user_card" (?:[\s\S]*?)>(.*?)</a>(?:[\s\S]*?) class="d_post_content j_d_post_content clearfix">(.*?)</div>'
    while True:
        with lock:
            url_list = getURL(maxURL)
        if not url_list:
            break
        print url_list
        for url in url_list:
            print "download:" + url,
            try:
                # Download outside the lock so the threads can fetch in parallel
                html = urllib2.urlopen(url).read()
                with lock:
                    for name, context in re.findall(p, html):
                        # Parameterized query replaces the manual quote escaping
                        cursor.execute(
                            "insert into tieba (url,name,context) values (%s,%s,%s)",
                            (url, name, context))
                    cursor.execute("update url set status = 2 where url = %s", (url,))
                print "√"
            except Exception as msg:
                # `with lock` has already released the lock here, so it is safe to take it again
                with lock:
                    cursor.execute("update url set status = -1 where url = %s", (url,))
                print "x:" + str(msg)

def start():
    # Create all worker threads, start them, then wait for every one to finish
    threads = []
    for i in range(threadNum):
        t = threading.Thread(target=download_tie)
        threads.append(t)
    for i in range(threadNum):
        threads[i].start()
    for i in range(threadNum):
        threads[i].join()

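# Number of worker threads
threadNum = 100
# Number of URLs a worker claims per batch, to avoid hitting the database too often
maxURL = 10
lock = threading.Lock()
oldtime = time.time()
# Download the thread URLs from the first 3 pages
downloadURL("python", 3)
start()
print "OK! time spent:" + str(time.time() - oldtime)
cursor.close()
db.close()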
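Because the whole crawler depends on the two regular expressions, it can help to try one on a small fragment before pointing it at the live site. The sketch below runs the thread-list pattern from `downloadURL` against hand-written HTML. The fragment, the thread IDs, and the author names are made up to fit the pattern and are not a capture of a real Tieba page. It is written for Python 3, where strings are Unicode, whereas the script above is Python 2.

```python
import re

# Same pattern as in downloadURL, split across lines for readability.
THREAD_PATTERN = re.compile(
    r'<a href="(.*?)" title="(.*?)" target="_blank" class="j_th_tit ">'
    r'(?:[\s\S]*?)title="主题作者: (.*?)"'
    r'(?:[\s\S]*?)title="创建时间">(.*?)</span>'
)

# Hand-written sample, shaped only to exercise the pattern.
SAMPLE_HTML = '''
<a href="/p/1001" title="Hello Python" target="_blank" class="j_th_tit ">Hello Python</a>
<span title="主题作者: alice"></span>
<span title="创建时间">3-14</span>
<a href="/p/1002" title="Regex help" target="_blank" class="j_th_tit ">Regex help</a>
<span title="主题作者: bob"></span>
<span title="创建时间">12:30</span>
'''

rows = [
    {
        "url": "http://tieba.baidu.com" + href,
        "title": title,
        "author": author,
        "time": created,
    }
    for href, title, author, created in THREAD_PATTERN.findall(SAMPLE_HTML)
]

for row in rows:
    print(row["url"], row["title"], row["author"], row["time"])
```

The lazy `[\s\S]*?` gaps are what make the pattern fragile: if one thread lacks an author or time element, the match can run on into the next thread and pair the wrong fields, and any change to the page markup breaks the match silently.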
